NervLand: Implementing the DeepWater Ocean model from Proland

In my recent work on implementing the DeepWater Ocean model from Proland, I encountered various challenges and made significant progress. Initially, I created a basic DeepWaterModel class and implemented support for generating specific spectra. As I delved deeper, I faced errors related to texture usage and format compatibility.

Despite some setbacks, I debugged my initial implementation, improving code efficiency and fixing errors. I also experimented with building dependencies like glew and glut from sources to enhance the project. Along the way, I recompiled demo apps and investigated issues with FFT computation and mipmap generation.

Furthermore, I worked on rendering the ocean grid accurately, ensuring proper horizon handling and investigating ocean space projection. To integrate ocean rendering with the planet, I devised strategies to handle depth rendering and achieved some success. However, challenges remained, such as discrepancies in lighting between projected grids and screen quads.

Despite these challenges, my progress has been steady, and I continue to refine the implementation for a more accurate and visually appealing ocean rendering.

Youtube video for this article available at:

References

Initial implementation steps

First I created a simple DeepWaterModel class. Next I implemented the support to generate the spectrum12 and spectrum34 just as in the reference implementation.

Next I'm implementating the support to generate the 3D slope variances texture. OK

Now, after generating the slopes variances texture, I'm supposed to download the texture data from GPU to compute on CPU the max value, but this got me thinking: could I not instead compute the max on the GPU and download a single value ? (this is not going to make any difference here since we just have 10x10x10 slope variances, but still I'm curious…)

Arrgghh.. crap, first thing I notice is this error:

2024-01-26 12:21:43.940274 [ERROR] Dawn: Validation error: The texture usage (TextureUsage::(TextureBinding|StorageBinding)) includes TextureUsage::StorageBinding, which is incompatible with the format (TextureFormat::R16Float). - While validating [TextureDescriptor]. - While calling [Device].CreateTexture([TextureDescriptor]).

Found this page on the topic: https://github.com/gpuweb/gpuweb/issues/513

⇒ Would seem that support for storage binding with r16float is optional on the vulkan backend ? Let's try the DX12 version. Aarrfff, no: actually I was already on DX12 😅 (and it's not better with vulkan). So for now it seems I need to write to an R32Float format since R16Float is not supported here (but RGBA16Float is by the way).

Arrghh, one step further now, but it seems that my computation of the total slope variance is going completely wrong. I have this code:

F32 totalSlopeVariance = 0.0;

F32 size1 = _traits.grid1Size;

F32 size2 = _traits.grid2Size;

F32 size3 = _traits.grid3Size;

F32 size4 = _traits.grid4Size;

half* spectrum12 = _spectrumData12.data();

half* spectrum34 = _spectrumData34.data();

for (int y = 0; y < _fftSize; ++y) {

for (int x = 0; x < _fftSize; ++x) {

U32 offset = 4 * (x + y * _fftSize);

F32 i =

(F32)(2.0 * nv::PI * (x >= _fftSize / 2 ? x - _fftSize : x));

F32 j =

(F32)(2.0 * nv::PI * (y >= _fftSize / 2 ? y - _fftSize : y));

totalSlopeVariance +=

getSlopeVariance(i / size1, j / size1, spectrum12 + offset);

totalSlopeVariance +=

getSlopeVariance(i / size2, j / size2, spectrum12 + offset + 2);

totalSlopeVariance +=

getSlopeVariance(i / size3, j / size3, spectrum34 + offset);

totalSlopeVariance +=

getSlopeVariance(i / size4, j / size4, spectrum34 + offset + 2);

}

}

And this will produce the outputs:

2024-01-26 13:06:19.527269 [NOTE] Found slope delta: -391437400000000 2024-01-26 13:06:19.527288 [NOTE] Theoretical Slope: 0.03191719 2024-01-26 13:06:19.527291 [NOTE] Total Slope: 391437400000000

So, let's see what this is about… 🤔 Hmmm, that's fun: I reproduced about exactly the same code in a unit test, and there its working fine… my my my 🤣 I must be doing something really stupid here.

Arrff! found it! It was those lines where I really need to convert the FFT Size from U32 to I32:

float i = 2.0 * PI * (x >= _fftSize / 2 ? x - (I32)_fftSize : x);

float j = 2.0 * PI * (y >= _fftSize / 2 ? y - (I32)_fftSize : y);

And now about my initial idea: “could I not instead compute the max on the GPU and download a single value ?”: That would really require a reduction so more compute passes, and we don't want that here.

Building glew ang glut from sources

Trying to see if I could compile the OceanLighting app from sources. I would need glew and glu in 64bits version.

⇒ Found this page to download binaries for glew: https://glew.sourceforge.net/

And for freeglut I just added a simple builder and everything when just fine 😆.

Trying to rebuild the OceanLighting demo app

Arrggghh… Just realized I will need support for AntTweakBar too. Let's see… OK

And after some additional tweaking, here we are! I got the OceanLighting demo app recompiled in 64bit arch:

Next, let's try to do the same with the OcenLightingFFT example code.

Hmmm, okay… I could rebuild the FFT demo… but it doesn't work out of the box 😶. I get the sky displayed properly but no ocean grid

Feeeww! Hopefully I could find a working version from this repo: https://github.com/jjuiddong/OceanLightingFFT/tree/master

Implementing the FFT Compute pass for wave simulation

Important note: I added the following BindEntry generator:

auto stoTex2dArray(wgpu::Texture texture, Bool write, Bool read) -> BindEntry {

auto entry = stoTex2d(std::move(texture), write, read);

entry.texture_dim = wgpu::TextureViewDimension::e2DArray;

entry.texture_num_layers = 0;

return entry;

}

⇒ One thing to keep in mind here is that I needed to set the texture_num_layers value otherwise, we will just write to one layer. And clearly, this is someting I will need to keep in mind when I need to write to some specific mip level!

I then also added support to write the FFT A texture content to disk, and then compared it if the reference content:

⇒ The textures don't seem to match properly but they are very similar, so for now I will consider this is good enough.

Implementing support for mipmap generation

OK: was relativel easy in the end: will make a video about it

Working on initial ocean grid display

Investigations on ocean space projection

In the reference glsl code we start with:

float t;

vec3 cameraDir;

vec3 oceanDir;

vec2 u = oceanPos(vertex, t, cameraDir, oceanDir);

The definition of the function is:

vec2 oceanPos(vec3 vertex, out float t, out vec3 cameraDir, out vec3 oceanDir) {

float horizon = horizon1.x + horizon1.y * vertex.x - sqrt(horizon2.x + (horizon2.y + horizon2.z * vertex.x) * vertex.x);

cameraDir = normalize((screenToCamera * vec4(vertex.x, min(vertex.y, horizon), 0.0, 1.0)).xyz);

oceanDir = (cameraToOcean * vec4(cameraDir, 0.0)).xyz;

float cz = oceanCameraPos.z;

float dz = oceanDir.z;

if (radius == 0.0) {

t = (heightOffset + 5.0 - cz) / dz;

} else {

float b = dz * (cz + radius);

float c = cz * (cz + 2.0 * radius);

float tSphere = - b - sqrt(max(b * b - c, 0.0));

float tApprox = - cz / dz * (1.0 + cz / (2.0 * radius) * (1.0 - dz * dz));

t = abs((tApprox - tSphere) * dz) < 1.0 ? tApprox : tSphere;

}

return oceanCameraPos.xy + t * oceanDir.xy;

}

On the code side (in “DrawOceanFFTTask”), the vertices are added in clip coordinates:

for (int i = 0; i < NY; ++i) {

for (int j = 0; j < NX; ++j) {

o->screenGrid->addVertex(vec2f(2.0*f*j/(NX-1.0f)-f, 2.0*f*i/(NY-1.0f)-f));

}

}

Alright, next we have this “horizon” computation thing…

In the code we have this:

if (o->horizon1U != NULL) {

float h = oc.z;

vec3d A0 = (ctoo * vec4d((stoc * vec4d(0.0, 0.0, 0.0, 1.0)).xyz(), 0.0)).xyz();

vec3d dA = (ctoo * vec4d((stoc * vec4d(1.0, 0.0, 0.0, 0.0)).xyz(), 0.0)).xyz();

vec3d B = (ctoo * vec4d((stoc * vec4d(0.0, 1.0, 0.0, 0.0)).xyz(), 0.0)).xyz();

if (o->radius == 0.0) {

o->horizon1U->set(vec3f(-(h * 1e-6 + A0.z) / B.z, -dA.z / B.z, 0.0));

o->horizon2U->set(vec3f::ZERO);

} else {

double h1 = h * (h + 2.0 * o->radius);

double h2 = (h + o->radius) * (h + o->radius);

double alpha = B.dotproduct(B) * h1 - B.z * B.z * h2;

double beta0 = (A0.dotproduct(B) * h1 - B.z * A0.z * h2) / alpha;

double beta1 = (dA.dotproduct(B) * h1 - B.z * dA.z * h2) / alpha;

double gamma0 = (A0.dotproduct(A0) * h1 - A0.z * A0.z * h2) / alpha;

double gamma1 = (A0.dotproduct(dA) * h1 - A0.z * dA.z * h2) / alpha;

double gamma2 = (dA.dotproduct(dA) * h1 - dA.z * dA.z * h2) / alpha;

o->horizon1U->set(vec3f(-beta0, -beta1, 0.0));

o->horizon2U->set(vec3f(beta0 * beta0 - gamma0, 2.0 * (beta0 * beta1 - gamma1), beta1 * beta1 - gamma2));

}

}

but to understand that we first need to understand how we compute the camera to ocean matrix (ctoo):

vec3d ux, uy, uz, oo;

if (o->radius == 0.0) {

// flat ocean

ux = vec3d::UNIT_X;

uy = vec3d::UNIT_Y;

uz = vec3d::UNIT_Z;

oo = vec3d(cl.x, cl.y, 0.0);

} else if (o->radius > 0.0) {

// spherical ocean

uz = cl.normalize(); // unit z vector of ocean frame, in local space

if (o->oldLtoo != mat4d::IDENTITY) {

ux = vec3d(o->oldLtoo[1][0], o->oldLtoo[1][1], o->oldLtoo[1][2]).crossProduct(uz).normalize();

} else {

ux = vec3d::UNIT_Z.crossProduct(uz).normalize();

}

uy = uz.crossProduct(ux); // unit y vector

oo = uz * o->radius; // origin of ocean frame, in local space

} else {

// cylindrical ocean

uz = vec3d(0.0, -cl.y, -cl.z).normalize();

ux = vec3d::UNIT_X;

uy = uz.crossProduct(ux);

oo = vec3d(cl.x, 0.0, 0.0) + uz * o->radius;

}

mat4d ltoo = mat4d(

ux.x, ux.y, ux.z, -ux.dotproduct(oo),

uy.x, uy.y, uy.z, -uy.dotproduct(oo),

uz.x, uz.y, uz.z, -uz.dotproduct(oo),

0.0, 0.0, 0.0, 1.0);

// compute ctoo = cameraToOcean transform

mat4d ctoo = ltoo * ctol;

Actually, even before that, we have a section where we check the altitude of the camera, and we simply don't render the ocean when above that altitude value:

/* Check if we need to reset the oldLocalToOcean matrix/offset:*/

auto radius = _desc.radius;

auto zmin = _desc.zmin;

if ((radius == 0.0 && camLocalPos.z() > zmin) ||

(radius > 0.0 && camLocalPos.length() > radius + zmin) ||

(radius < 0.0 && camLocalPos.yz().length() < -radius - zmin)) {

_prevLocalToOcean.make_identity();

_positionOffset.set(0.0, 0.0, 0.0);

return;

}

Thing is, in my case, I already have a RenderBundle created for the ocean and passed to the render pass: so I may need to toggle the encoding of that bundle then 🤔?

Okay, and now back to the horizon:

As far as I understand it now, the following 2 lines are used to compute the horizon of the planet in scree space for any given x position:

float horizon = horizon1.x + horizon1.y * vertex.x - sqrt(horizon2.x + (horizon2.y + horizon2.z * vertex.x) * vertex.x);

cameraDir = normalize((screenToCamera * vec4(vertex.x, min(vertex.y, horizon), 0.0, 1.0)).xyz);

⇒ I basically don't understand much of the calculations but let's just accept that for the moment

OKAY: for the first run test I got a completely messed up display

ux = VEC3D_ZAXIS.cross(uz).normalized();

But after fixing this I got the proper horizon handling:

Next, here are the first deformations I got witha working application of the fft texture:

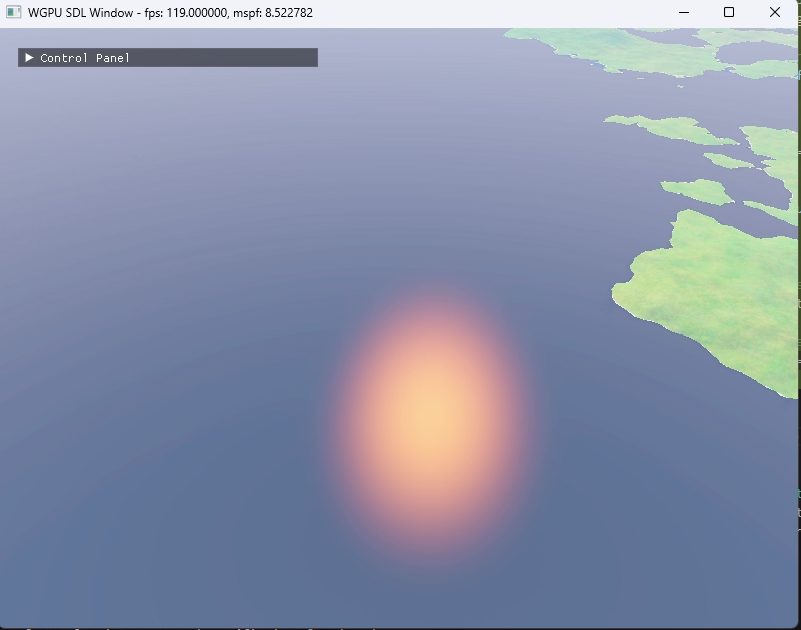

And next this is the first display I got trying to process only the sun irradiance part:

… Pretty desappointing 😅. But let's keep digging anyway.

okay, so… adding all the 3 lighting components only produced really poor results: so this means I have a bug somewhere 😭, and it's time to investigate what's going wrong step by step.

Debugging our initial implementation

⇒ I'm going to use the working OceanLightingFFT example I have as a reference for this debugging process, after all, I have not compiled the Proland ocean version, so I'm not absolutely sure the version I have is OK.

First change: when sampling the slope variance texture we need to use a Linear/ClampToEdge sampler (not a Nearest sampler!)

Also, I just realized that in the OceanLightingFFT sample, the slope variance texture has 2 channels instead of just one 😲! That sounds like a pretty significant difference.

Moving to try to build Ork/Proland from sources

….Yeahh…. that's how desperated I am lol. Trying to build Ork/proland from sources. And first issue on the road: I need pthreads on windows 😒.

I first considered this repository as a starting point: https://github.com/LarsFlaeten/ork/

But finally realized that in the official repo we already had a Cmake build system to so I decided to stick to https://gitlab.inria.fr/neyret/ork.git

⇒ OK Setting up the build for ORK was relatively easy.

Then the examples are not really working.

But the unit testing app seems to be working fine when we run test_ork FORK:

So now time to try compiling Proland itself ??

⇒ Learnt a new thing here: the Ork library uses a lot of static resources ⇒ we loose them when linking a static library. That's where the cmake OBJECT library type come into play

Note: according to the INSTALL page (https://github.com/zhongnanshan/proland-git/blob/master/INSTALL.TXT) proland depends on glew 1.5.6, so let's try that version. Arrff, that didn't really help.

Now trying with the resources from this repo: https://github.com/weiou063374/proland-windows

OK: I could eventually build the Ork library and a significant part of Proland from sources! Currently I stopped that process because I have all the elements I need to continue the investigations on the Ocean rendering, but that was a very good step to take in the end! (now I will have working references for the other Proland plugins)

Resuming investigations on Ocean rendering

Back to the ocean rendering implementation: I noticed something strange when using F16 format: I don't seem to get a proper match on simple content from the slope variance texture 🤔, strange…

⇒ I eventually figured out that the mismatch comes from the line:

slopeVariance += getSlopeVariance(k / GRID_SIZES.w, A, B, C, spectrum34.zw);

And that would be because we get a reasonably large “k” vector provided as input in getSlopeVariance, where we use the exp() function… It seems that's never too good for stability.

Now I'm back in the render_ocean shader, and noticing that I'm not doing this part of the computation right:

vec3 sunL;

vec3 skyE;

vec3 extinction;

sunRadianceAndSkyIrradiance(earthP, N, oceanSunDir, sunL, skyE);

For that I tried to reuse the following atmosphere function directly, but the results are not the same:

var sky_irradiance: vec3f = vec3f(0.0);

// var sun_irradiance: vec3f = GetSunAndSkyIlluminance(earthP / 1000.0, N, params.oceanSunDir.xyz, &sky_irradiance);

var sun_irradiance: vec3f = GetSunAndSkyIrradiance(earthP / 1000.0, N, params.oceanSunDir.xyz, &sky_irradiance);

⇒ So time for deeper investigations now. OK: made some progress.

And next thing I noticed is that I'm using a “skyIrradianceSampler” in the reference code below:

/* incident sky light at given position, integrated over the hemisphere (irradiance) */

// r=length(x)

// muS=dot(x,s) / r

vec3 skyIrradiance(float r, float muS) {

#if defined(ATMO_SKY_ONLY) || defined(ATMO_FULL)

return irradiance(skyIrradianceSampler, r, muS) * SUN_INTENSITY;

#else

return vec3(0.0);

#endif

}

⇒ I doubt this is jut the irradiance texture computed for the atmosphere 🤔… Well, not this is really it. But then I noticed that if I remove the SUN_INTENSITY factor in my code I will get back to some correct display 🥳! So I'm now just using:

fn skyIrradiance(r: f32, muS: f32) -> vec3f {

return GetIrradiance(r, muS);

}

OK! So I finally have my ocean rendering merged with the planet rendering itself. That's great 🥳! But unfortunately, there is still some kind of artefact in the display due to how I select between ocean or ground pixel:

I made some more progress on the display but then got this artefact when I started rendering the underwater terrain with correct depths:

⇒ What I'm realizing now is that this is because I'm not writing the depth values in the ocean rendering pass, but then in the atmosphere rendering pass I'm using that depth value to compute the atmosphere scattering effect. Not too good 🫣.

I could also write the depth in the ocean without clearing the previous content maybe 🤔? Would that do the trick ? It's worth trying at least.

Handle ocean grid display from space appropriately

First attempt

First, we should add a uniform flag to decide if we displace the vertices or not. Then we use that flag in the vertex shader to either project the vertices or keep the screen aligned quad.

Even better: instead of using all the vertices if we are above a given altitude we should use only the first 3 vertices to draw a triangle on screen and then degenerate the remaining vertices outside of the screen.

In case we draw the screen aligned triangle, in the fragment shader we also receive the camera view ray, so we use that to find the intersection with the earth sphere.

Then we check the currently written depth for that pixel if we have an intersection. And from there we should be able to perform the ocean computation as before.

And in fact, this doesn't work:

If I were to draw a screen aligned quad, while still writing the depth value, I would loose all the depth information I have for the planet 😭. So I would need to toggle the writing to the depth buffer which means a different render node.

Second attempt

Alright, so, next idea now:

I could render the planet to color + depth, then pass those color + depth texture to the ocean rendering on a secondary pass, writing to 2 color attachments color2 + cam_dist32f and not writing to a depth attachment.

This way, whether we are projecting the grid or rendering the screen aligned quad, we will be responsible for explicitly writing a depth value for our pixels, and we can then use those 2 inputs to render the atmosphere.

⇒ let's give this a try.

arrff, got an error in this process:

2024-02-08 20:56:44.536824 [ERROR] Dawn: Validation error: Color blending srcfactor (BlendFactor::SrcAlpha) or dstFactor (BlendFactor::OneMinusSrcAlpha) is reading alpha but it is missing from fragment output. - While validating targets[1]. - While validating fragment state. - While calling [Device].CreateRenderPipeline([RenderPipelineDescriptor]).

But I know where this comes from: I should disable blending for the second color attachment of the ocean render pass

_renderNode->set_blend_mode(BLEND_DISABLED);

Next error on the line:

2024-02-08 21:01:20.632086 [ERROR] Dawn: Validation error: The sample type in the shader is not compatible with the sample type of the layout. - While validating that the entry-point's declaration for @group(0) @binding(6) matches [BindGroupLayout] - While validating the entry-point's compatibility for group 0 with [BindGroupLayout] - While validating fragment stage ([ShaderModule], entryPoint: main_fs). - While validating fragment state. - While calling [Device].CreateRenderPipeline([RenderPipelineDescriptor]).

Let's see 🤔… OK, I was still using a texture_depth_2D binding in the atmosphere pass (while I need a texture_2d<f32> instead), so fixed as this:

// @group(0) @binding(6) var depth_texture : texture_depth_2d; @group(0) @binding(6) var depth_texture : texture_2d<f32>;/

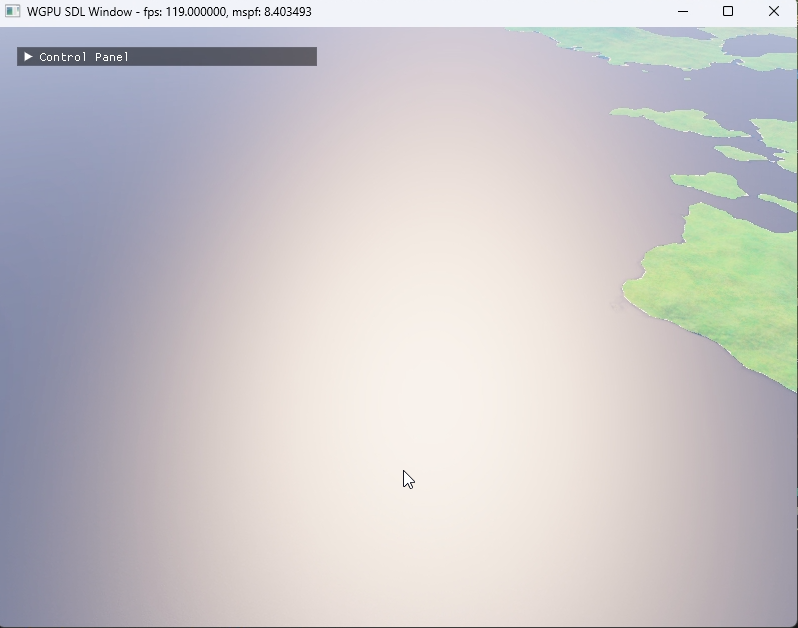

⇒ So this worked a little bit better, but unfortunately, since I had to process the fragment differently in the screen quad case, I could not reproduce the exact same lighting:

Here is the display with screen quad:

Here is the display as soon as we switch to projected grid:

For reference here are the part of code I changed for that experiment in render_ocean.wgsl:

struct VertexOutput {

@builtin(position) Position: vec4f,

@location(0) oceanU: vec2f,

@location(1) oceanP: vec3f,

@location(2) camDist: f32,

@location(3) viewRay: vec3f,

@location(4) coords: vec2f,

@location(5) ray: vec3f,

}

fn generateScreenQuad(input: StdVsInput) -> VertexOutput {

var output: VertexOutput;

// We only use the first 3 vertices to produce a screen quad:

if input.vertexID < 3 {

var uv: vec2f = get_screen_triangle_pos(input.vertexID);

// Note that we have **NO** ocean position to provide here.

var coords: vec2f = uv * 2.0 - 1.0;

// We can simply write a screen aligned quad position:

var pos: vec4f = vec4f(coords, 0.0, 1.0);

output.Position = pos;

// UV coords and view ray in camera space:

output.coords = uv;

var unProjected: vec4f = get_proj_mat_inverse(camParams) * pos;

output.viewRay = unProjected.xyz;

// ray in world space:

output.ray = (get_view_mat_inverse(camParams) * vec4(unProjected.xyz, 0.0)).xyz;

} else {

// Degenerated vertices:

output.Position = vec4f(2.0, 2.0, -1.0, 1.0);

}

return output;

}

fn computeOceanOnScreen(in: VertexOutput, camDist: ptr<function, f32>) -> vec3f {

// We have the ray in the world space and the camera position in world:

var origin: vec3f = camParams.camWorldPos.xyz / kLengthUnitInMeters;

var ray: vec3f = normalize(in.ray);

// Compute the intersection with the earth surface:

var hit = vec4f(0.0);

if ray_sphere_intersect(origin, ray, vec3f(0.0), ATMO.bottom_radius, &hit) {

// We have a hit with the earth

*camDist = hit.w * kLengthUnitInMeters;

// earth Point (in km)

var earthP: vec3f = hit.xyz;

var N: vec3f = normalize(earthP);

var V: vec3f = normalize(origin - earthP);

var sky_irradiance: vec3f = vec3f(0.0);

var sun_irradiance: vec3f = sunRadianceAndSkyIrradiance(earthP, N, params.worldSunDir.xyz, &sky_irradiance);

var sigmaSq: f32 = textureSampleLevel(slopeVarianceTex, slopeSampler, vec3f(0.0, 0.5, 0.0), 0).x;

sigmaSq = max(sigmaSq, 2e-5);

// sigmaSq = 2e-5;

sky_irradiance = vec3f(0.5, 0.5, 0.5);

var Lsun: vec3f = wardReflectedSunRadiance(params.worldSunDir.xyz, V, N, sigmaSq) * sun_irradiance;

// Lsun = vec3f(0.0);

var Lsky: vec3f = meanFresnel(V, N, sigmaSq) * sky_irradiance / PI;

var Lsea: vec3f = refractedSeaRadiance(V, N, sigmaSq) * params.seaColor.xyz * sky_irradiance / PI;

var surfaceColor: vec3f = Lsun + Lsky + Lsea;

var finalColor: vec3f = hdr(surfaceColor);

return finalColor;

}

return vec3f(1.0);

}

// Proceed differently if we are displacing the vertices or not:

var oceanColor: vec3f = vec3f(0.0);

var cameraDistance: f32 = -1.0;

if grid.displace_vertices == 1 {

// Now compare our current fragment distance to the camera with the value we just read from the base pass

// depth buffer. If our distance is smaller it means this ocean pixel should be shown, and

// otherwise, our ocean pixel is below the ground, so we keep displaying the ground color:

oceanColor = computeOceanSurface(in);

cameraDistance = in.camDist;

} else {

oceanColor = computeOceanOnScreen(in, &cameraDistance);

}

// Get the ground color:

var color_uv: vec2f = vec2f(in.coords.x, 1.0 - in.coords.y) * basePass.color0_uv_scale;

// var color_uv: vec2f = in.coords.xy * basePass.color0_uv_scale;

// var color: vec3f = textureSampleLevel(albedo_texture, albedoSampler, color_uv, 0).xyz;

var cdata: vec4f = textureSampleLevel(albedo_texture, albedoSampler, color_uv, 0);

var color: vec3f = cdata.xyz;

// Note: we only update the color if the cameraDistance is positive,

// which might not be the case on the computeOceanOnScreen() path;

if cameraDistance >= 0.0 && ((cameraDistance < dist) || (cdata.w > 0.0)) {

// This is a water pixel.

// So we modulate the color with the ocean color,

// First selecting the water depth to use:

// Note: we use a scale factor of 25.0 in the planet_ortho shader when

// writting the w component of the color data:

var water_depth = max((dist - cameraDistance) + 1.0, cdata.w * 25.0);

// Using some kind of "Beer-Lambert Law" here:

var alpha: f32 = 0.03;

color = mix(oceanColor, color * oceanColor, exp(-alpha * water_depth));

// Update the depth value that we should write back:

var point: vec3f = normalize(in.viewRay) * cameraDistance;

depth = linear_depth_to_logz(camParams, point.z);

// color = oceanColor;

}

Third attempt

Let's push the experiment in the 2nd attempt a bit further: we should still process the vertices differently, but this time instead of displaying a single screen quad, let's try to reuse all the vertices and project them on the earth to always match the earth shape in the view.

OK! this time this worked pretty well and I could get the grid displaced to cover the earth. So here is a view from space where that grid is used:

For reference, here is the code I wrote to compute the “earth pointer frame” and the “earth disk size” in the camera space:

Vec3d earthCamPos = localToCamera.get_trans();

Vec3d earthCamDir = earthCamPos.normalized();

F64 h2 = earthCamPos.length();

F64 R = _desc.radius;

F64 h_div_R = h2 / R;

// var d: f32 = sqrt(h * h - R * R);

// var d: f32 = h * sqrt(1.0 - (R / h) * (R / h));

F64 d_div_h = std::sqrt(1.0 - (R / h2) * (R / h2));

F64 alpha_div_R = std::sqrt(1.0 - d_div_h * d_div_h);

Vec3d rightDir = earthCamDir.cross(Vec3d(0.0, 1.0, 0.0)).normalized();

Vec3d upDir = rightDir.cross(earthCamDir);

// Store the earth size uniform:

oceanDat.earthSize = (F32)(d_div_h / (h_div_R - alpha_div_R));

Mat4d earthToCam{rightDir.x(),

upDir.x(),

earthCamDir.x(),

0.0,

rightDir.y(),

upDir.y(),

earthCamDir.y(),

0.0,

rightDir.z(),

upDir.z(),

earthCamDir.z(),

0.0,

0.0,

0.0,

0.0,

1.0};

oceanDat.earthPointerToCamera.set(earthToCam);

oceanDat.cameraToEarthPointer.set(earthToCam.inverse());

Update earth grid taking the projection corners into account

When we are only displaying part of the Earth in our view, it would also be good if we could only display the vertices in the visible part of the earth. Effectively stretching the initial grid on the part of earth that we want to cover. Let's see how we can do that properly.

[crunching… crunching…] okay… so this took a long while, and I lost track of what I was noting down here 🤣

But anyway, now we have a somewhat working ocean rendering process so I'm moving to another topic for the moment (will get back to this eventually):

Note: The latest demo app I built on this can we started with the command:

nvp planet03_ocean