Venturing into alternate blockchains: CELO network

Hello world! At the end of my latest previous article I finished in a pretty desperated state of mind I have to admit it. But, the world keeps spinning, and good or bad things will keep happening, and this is new day, etc. So here I am again, experimenting stuff 😅. But for the moment I'm a bit tired of the Binance Smart Chain, so I'm starting to check other blockchains just in case things would be different there, and the first one on my “alternate blockchains” list is CELO. Lets' see what we have there!

Automatic restart of nvp script

- Before I jump into the main topic, something I've been thinking about since a moment now is about the handling of the script processes in NVP: many times my arbitrage monitoring process died because of lost of connection (yes I have a crappy internet connection, that's another key part of the overall problem, I know…). So here is typically how this will end in that kind of situation:

2022/06/16 05:31:36 [__main__] INFO: 31 pair reserves collected in 0.1689 secs 2022/06/16 05:34:00 [__main__] INFO: Block age: 1.033694 2022/06/16 05:34:00 [__main__] INFO: 18 pair reserves collected in 0.1519 secs 2022/06/16 05:35:27 [__main__] INFO: Block age: 1.271724 2022/06/16 05:36:21 [nvh.crypto.blockchain.evm_smart_contract] ERROR: Exception occured while calling 'getDualReserves' (on 0x...): HTTPSConnectionPool(host='bsc-dataseed1.binance.org', port=443): Max retries exceeded with url: / (Caused by NewConnectionError('<urllib3.connection.HTTPSConnection object at 0x000002357E7325F0>: Failed to establish a new connection: [Errno 11001] getaddrinfo failed')) 2022/06/16 05:36:34 [nvh.crypto.blockchain.evm_smart_contract] ERROR: Exception occured while calling 'getDualReserves' (on 0x...): HTTPSConnectionPool(host='bsc-dataseed1.binance.org', port=443): Max retries exceeded with url: / (Caused by NewConnectionError('<urllib3.connection.HTTPSConnection object at 0x0000023519043310>: Failed to establish a new connection: [Errno 11001] getaddrinfo failed')) 2022/06/16 05:36:36 [nvh.crypto.blockchain.evm_smart_contract] ERROR: Exception occured while calling 'getDualReserves' (on 0x...): HTTPSConnectionPool(host='bsc-dataseed1.binance.org', port=443): Max retries exceeded with url: / (Caused by NewConnectionError('<urllib3.connection.HTTPSConnection object at 0x0000023519042380>: Failed to establish a new connection: [Errno 11001] getaddrinfo failed')) 2022/06/16 05:36:38 [nvh.crypto.blockchain.evm_smart_contract] ERROR: Exception occured while calling 'getDualReserves' (on 0x...): HTTPSConnectionPool(host='bsc-dataseed1.binance.org', port=443): Max retries exceeded with url: / (Caused by NewConnectionError('<urllib3.connection.HTTPSConnection object at 0x0000023519043310>: Failed to establish a new connection: [Errno 11001] getaddrinfo failed')) 2022/06/16 05:36:40 [nvh.crypto.blockchain.evm_smart_contract] ERROR: Exception occured while calling 'getDualReserves' (on 0x...): HTTPSConnectionPool(host='bsc-dataseed1.binance.org', port=443): Max retries exceeded with url: / (Caused by NewConnectionError('<urllib3.connection.HTTPSConnection object at 0x000002351BBA5E40>: Failed to establish a new connection: [Errno 11001] getaddrinfo failed')) 2022/06/16 05:36:42 [nvh.crypto.blockchain.evm_smart_contract] ERROR: Exception occured while calling 'getDualReserves' (on 0x...): HTTPSConnectionPool(host='bsc-dataseed1.binance.org', port=443): Max retries exceeded with url: / (Caused by NewConnectionError('<urllib3.connection.HTTPSConnection object at 0x000002351BBA5CF0>: Failed to establish a new connection: [Errno 11001] getaddrinfo failed')) 2022/06/16 05:36:44 [nvh.crypto.blockchain.evm_smart_contract] ERROR: Exception occured while calling 'getDualReserves' (on 0x...): HTTPSConnectionPool(host='bsc-dataseed1.binance.org', port=443): Max retries exceeded with url: / (Caused by NewConnectionError('<urllib3.connection.HTTPSConnection object at 0x000002351BBA6050>: Failed to establish a new connection: [Errno 11001] getaddrinfo failed')) 2022/06/16 05:36:46 [nvh.crypto.blockchain.evm_smart_contract] ERROR: Exception occured while calling 'getDualReserves' (on 0x...): HTTPSConnectionPool(host='bsc-dataseed1.binance.org', port=443): Max retries exceeded with url: / (Caused by NewConnectionError('<urllib3.connection.HTTPSConnection object at 0x000002351BBA4B80>: Failed to establish a new connection: [Errno 11001] getaddrinfo failed')) 2022/06/16 05:36:59 [nvh.crypto.blockchain.evm_smart_contract] ERROR: Exception occured while calling 'getDualReserves' (on 0x...): HTTPSConnectionPool(host='bsc-dataseed1.binance.org', port=443): Max retries exceeded with url: / (Caused by NewConnectionError('<urllib3.connection.HTTPSConnection object at 0x000002351BBA7A60>: Failed to establish a new connection: [Errno 11001] getaddrinfo failed')) 2022/06/16 05:37:01 [nvh.crypto.blockchain.evm_smart_contract] ERROR: Exception occured while calling 'getDualReserves' (on 0x...): HTTPSConnectionPool(host='bsc-dataseed1.binance.org', port=443): Max retries exceeded with url: / (Caused by NewConnectionError('<urllib3.connection.HTTPSConnection object at 0x000002351BBA7D90>: Failed to establish a new connection: [Errno 11001] getaddrinfo failed')) Traceback (most recent call last): File "D:\Projects\NervHome\nvh\crypto\blockchain\arbitrage_manager.py", line 975, in <module> comp.run() File "D:\Projects\NervProj\nvp\nvp_component.py", line 81, in run res = self.process_command(cmd) File "D:\Projects\NervHome\nvh\crypto\blockchain\arbitrage_manager.py", line 930, in process_command self.monitor_arbitrages(chain) File "D:\Projects\NervHome\nvh\crypto\blockchain\arbitrage_manager.py", line 124, in monitor_arbitrages result = self.handle_arbitrage_setups() File "D:\Projects\NervHome\nvh\crypto\blockchain\arbitrage_manager.py", line 275, in handle_arbitrage_setups pair_reserves, block_ref = self.get_all_reserves(paddrs) File "D:\Projects\NervHome\nvh\crypto\blockchain\arbitrage_manager.py", line 760, in get_all_reserves result = self.dual_reserves_sc.call_function("getDualReserves", paddrs) File "D:\Projects\NervHome\nvh\crypto\blockchain\evm_smart_contract.py", line 139, in call_function self.throw("Cannot execute SmartContract function %s correctly", fname) File "D:\Projects\NervProj\nvp\nvp_object.py", line 73, in throw raise NVPCheckError(fmt % args) nvp.nvp_object.NVPCheckError: Cannot execute SmartContract function getDualReserves correctly 2022/06/16 05:37:04 [nvp.communication.email_handler] INFO: Should send the email message <p style="color: #fd0202;">**WARNING:** an exception occured in the following command:</p><p><em>['D:\\Projects\\NervProj\\.pyenvs\\bsc_env\\python.exe', 'D:\\Projects\\NervHome/nvh/crypto/blockchain/arbitrage_manager.py', 'monitor-arbs']</em></p><p>cwd=None</p><p >=> Check the logs for details.</p> 2022/06/16 05:37:06 [nvp.components.runner] ERROR: Error occured in script command: ['D:\\Projects\\NervProj\\.pyenvs\\bsc_env\\python.exe', 'D:\\Projects\\NervHome/nvh/crypto/blockchain/arbitrage_manager.py', 'monitor-arbs'] (cwd=None)

- ⇒ I should really do something about that: like restarting the script automatically when this happens for instance.

- At the same time I think I also need to improve on how I'm collecting/displaying the outputs from my script: here is a page describing how one could collect both stdout and stderr stream dynamically with additional threads (so I'm trying that too at the same time): https://stackoverflow.com/questions/31833897/python-read-from-subprocess-stdout-and-stderr-separately-while-preserving-order

- Okay, I think I'm almost there for the subprocess outputs handling part, so here is my updated

execute()method:def execute(self, cmd, **kwargs): """Execute a command optionally displaying the outputs.""" verbose = kwargs.get("verbose", True) cwd = kwargs.get("cwd", None) env = kwargs.get("env", None) check = kwargs.get("check", True) outfile = kwargs.get("outfile", None) print_outputs = kwargs.get("print_outputs", True) output_buffer = kwargs.get("output_buffer", None) # stdout = None if verbose else subprocess.DEVNULL # stderr = None if verbose else subprocess.DEVNULL stdout = subprocess.PIPE if verbose else subprocess.DEVNULL stderr = subprocess.PIPE if verbose else subprocess.DEVNULL if outfile is not None: stdout = outfile # cf. https://stackoverflow.com/questions/31833897/ # python-read-from-subprocess-stdout-and-stderr-separately-while-preserving-order def reader(pipe, queue, id): """Reader function for a stream""" try: with pipe: for line in iter(pipe.readline, b''): queue.put((id, line.decode('utf-8'))) finally: queue.put(None) # logger.info("Executing command: %s", cmd) try: proc = subprocess.Popen(cmd, stdout=stdout, stderr=stderr, cwd=cwd, env=env, bufsize=1) if verbose and (print_outputs or outfile is not None): myq = Queue() Thread(target=reader, args=[proc.stdout, myq, 0]).start() Thread(target=reader, args=[proc.stderr, myq, 1]).start() for _ in range(2): for _source, line in iter(myq.get, None): if print_outputs: # print(f"{source}: {line.strip()}") print(line.strip()) if output_buffer is not None: output_buffer.append(line.strip()) if outfile is not None: outfile.write(line) logger.info("Waiting for subprocess to finish...") proc.wait() logger.info("Returncode: %d", proc.returncode) if check and proc.returncode < 0: raise NVPCheckError(f"Subprocess terminated with error code {proc.returncode} (cmd={cmd})") # if check: # subprocess.check_call(cmd, stdout=stdout, stderr=stderr, cwd=cwd, env=env) # else: # subprocess.run(cmd, stdout=stdout, stderr=stderr, cwd=cwd, env=env, check=False) except KeyboardInterrupt: logger.info("Subprocess was interrupted.")

- Now I seem to have a remaining small issue in some cases when starting a some process since I get the outputs:

$ nvp arbman monitor-arbs 2022/06/16 09:19:02 [py.warnings] WARNING: D:\Projects\NervProj\tools\windows\python-3.10.1\lib\subprocess.py:956: RuntimeWarning: line buffering (buffering=1) isn't supported in binary mode, the default buffer size will be used self.stdout = io.open(c2pread, 'rb', bufsize) 2022/06/16 09:19:02 [py.warnings] WARNING: D:\Projects\NervProj\tools\windows\python-3.10.1\lib\subprocess.py:961: RuntimeWarning: line buffering (buffering=1) isn't supported in binary mode, the default buffer size will be used self.stderr = io.open(errread, 'rb', bufsize) 2022/06/16 09:19:08 [nvh.crypto.blockchain.evm_blockchain] INFO: Keeping 1123413/1143549 quotable pairs.

- I'm wondering what this is… 🤔 ⇒ Ahh okay, that's the

bufsize=1i'm passing to popen: according to the doc (https://docs.python.org/3/library/subprocess.html):- 0 means unbuffered (read and write are one system call and can return short)

- 1 means line buffered (only usable if universal_newlines=True i.e., in a text mode)

- any other positive value means use a buffer of approximately that size

- negative bufsize (the default) means the system default of io.DEFAULT_BUFFER_SIZE will be used.

- So let's try with 0 or default value instead… ⇒ OK, this works fine.

- And now I'm also returning the success state from the execute command, and with that I can implement the “auto restart feature” in the runner as follow:

# Execute that command: logger.debug("Executing script command: %s (cwd=%s)", cmd, cwd) auto_restart = desc.get("auto_restart", False) notify = self.config.get("notify_script_errors", True) while True: success, rcode, outputs = self.execute(cmd, cwd=cwd, env=env) if not success: outs = "\n".join(outputs) logger.error( "Error occured in script command:\ncmd=%s\ncwd=%s\nreturn code=%s\nlastest outputs:\n%s", cmd, cwd, rcode or "None", outs) if not success and notify: # And exception occured in the sub process, so we should send a notification: msg = ":warning: **WARNING:** an exception occured in the following command:\n" msg += f"{cmd}\n" msg += f"cwd={cwd}\n\n" msg += "=> Check the logs for details." rchat = self.get_component("rchat") rchat.send_message(msg, channel="problem-reports") msg = "<p style=\"color: #fd0202;\">**WARNING:** an exception occured in the following command:</p>" msg += f"<p><em>{cmd}</em></p>" msg += f"<p>cwd={cwd}</p>" msg += "<p >=> Check the logs for details.</p>" email = self.get_component("email") email.send_message("[NervProj] Exception notification", msg) if success or not auto_restart: break

- Finally, I just added a new super simple script to start the monitoring process on bsc:

"monitor_bsc_arbs": { "custom_python_env": "bsc_env", "auto_restart": true, "log_file": "${PROJECT_ROOT_DIR}/monitor_bsc_arbs.log", "cmd": "${PYTHON} ${PROJECT_ROOT_DIR}/nvh/crypto/blockchain/arbitrage_manager.py monitor-arbs -c bsc", "python_path": ["${PROJECT_ROOT_DIR}", "${NVP_ROOT_DIR}"] },

Initial checks on the blockchain

- Now we can finally start on this new blockchain

Actually I've already been doing some preliminary tests:

- I built a config entry for this new “celo” blockchain,

- I'm definiting a dynamic component for it, based on the generic EVMBlockchain component:

"celo_chain": "nvh.crypto.blockchain.evm_blockchain:celo",

- And then I could start retrieving blocks in jupyter:

from nvp.nvp_context import NVPContext import numpy as np from hexbytes import HexBytes import matplotlib.pyplot as plt if NVPContext.instance is None: NVPContext() ctx = NVPContext.get() chain = ctx.get_component('celo_chain') db = chain.get_db() bck = chain.get_block('latest', full_tx=True) # bck = chain.get_block('pending', full_tx=True) # print(f"Current timestamp: {chain.get_timestamp()}") bnum = bck['number'] ts = bck['timestamp'] ntx = len(bck["transactions"]) print(f"Block number: {bnum}, timestamp: {ts}, num tx: {ntx}") bck

- ⇒ Naturally, the block structure seems to be the same as on BSC (or Ethereum)

- Then I was manually collecting some blocks:

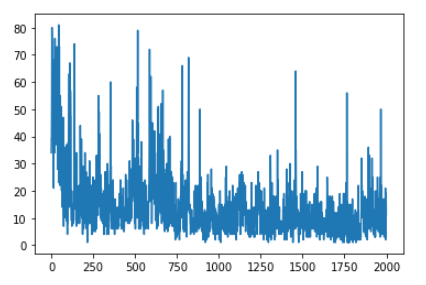

nblocks = 2000 blocks = [] bck = chain.get_block('latest') start_bnum = bck['number']-nblocks+1 for i in range(nblocks): bck = chain.get_block(start_bnum+i, full_tx=True) ntx = len(bck['transactions']) if (i+1)%100==0: print(f"{(i+1)*100/2000}% : Collected block {start_bnum+i} with {ntx} tx") blocks.append(bck) - And from that, plotted the number of transactions in each of those blocks:

num_txs = [len(bck['transactions']) for bck in blocks] plt.plot(num_txs)

- Not so much happening there, but it's that bad either.

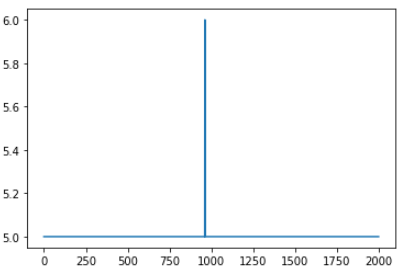

- Next I plotted the timedelta between 2 consecutive blocks, and surprisingly, we can notice there that we always have a time diff of 5 seconds:

times = [bck['timestamp'] for bck in blocks] times = np.array(times) plt.plot(times[1:]-times[:-1])

- Then I continued searching for the routers similar to uniswapV2 on the blockchain and found 3 of them:

Total tx count: 28661 Router tx counts: {'0xE3D8bd6Aed4F159bc8000a9cD47CffDb95F96121': 664, '0x1421bDe4B10e8dd459b3BCb598810B1337D56842': 510, '0x7D28570135A2B1930F331c507F65039D4937f66c': 10} - ⇒ We don't have much transactions on those routers: 2.31% / 1.78% and a ridiculous 0.0349% lol

Computing the gas price on CELO

- Next let's check if I can compute the gas price from that blockchain:

$ nvp bchain collect-gas-price -c celo 2022/06/16 21:00:33 [nvh.crypto.blockchain.evm_blockchain] INFO: Block 13566077: num_tx=5, mode=0.550, mean=0.211, dev=0.190

- Cool, this works out of the box!

- ⇒ So I should update my cron script to perform that task too: OK

- And I could add an additional page in my cryptoview app to report the CELO stats: OK

- And while I'm at it, I should also add support to back the celo_chain database: OK

Collecting and storing block data

- With my previous experiments above, what I realized was that I should in fact try to keep a record of the blocks/transactions on a given chain for a given period that could be considered relevant (multiple months ?)

- Now, that's a lot of data to handle, but I think I have options to store that in a compact form:

- For a block, I only really need the block number/timestamp/miner address/size/total_difficulty/number of transactions/gas used

- ie. No need for complex hex data storage here!

- For the miner address, I'm thinking I should built a dedicated table to map an address to a unique identifier, and then I would use that identifier everywhere else.

- Same ideas for the transactions, I only need for each transaction: the block number/transaction index/the from address/to address/gas/gas price/input/nonce/value

- Now for the input data from each transaction I could also use an identifier for the signature (which could just be itself, since that's an uint32 value)

I just found this website with a collection of signatures from Ethereum blockchain: https://www.4byte.directory/signatures/

- So let's prepare the tables:

SQL_CREATE_ADDRESS_TABLE = """ CREATE TABLE IF NOT EXISTS addresses ( id SERIAL PRIMARY KEY, address char(42) NOT NULL UNIQUE ); """ SQL_INSERT_ADDRESS = """ INSERT INTO addresses(address) VALUES(%s); """

- And then this got me thinking: here I would store 42 bytes per address, while an EVM address is really only just 20 bytes ⇒ I will save roughly half of the storage space if I store addresses as a triplet of (bigint (8bytes), bigint, int (4bytes)), so I will rather use the following schema:

SQL_CREATE_ADDRESSES_TABLE = """ CREATE TABLE IF NOT EXISTS addresses ( id SERIAL PRIMARY KEY, a1 BIGINT NOT NULL, a2 BIGINT NOT NULL, a3 INT NOT NULL, UNIQUE (a1, a2, a3) ); """ SQL_INSERT_ADDRESS = """ INSERT INTO addresses(address) VALUES(%s,%s,%s); """

- Then for the blocks I will use:

SQL_CREATE_BLOCKS_TABLE = """ CREATE TABLE IF NOT EXISTS blocks ( number INTEGER PRIMARY KEY, timestamp INTEGER, miner_id INTEGER, size INTEGER, difficulty INTEGER, tx_count SMALLINT, gas_used INTEGER ); """ SQL_INSERT_BLOCK = """ INSERT INTO blocks(number,timestamp,miner,size,difficulty,tx_count,gas_used) VALUES(%s,%s,%s,%s,%s,%s,%s); """

- And for the transactions:

SQL_CREATE_TRANSACTIONS_TABLE = """ CREATE TABLE IF NOT EXISTS transactions ( id SERIAL PRIMARY KEY, block_number INTEGER, tx_index SMALLINT, from_id INTEGER, to_id INTEGER, gas INTEGER, gas_price INTEGER, nonce INTEGER, value INTEGER, operation_type SMALINT, sig INTEGER, registers BYTEA ); """ SQL_INSERT_TRANSACTION = """ INSERT INTO transactions(block_number,tx_index,from_id,to_id,gas,gas_price,nonce,value,operation_type,sig,registers) VALUES(%s,%s,%s,%s,%s,%s,%s,%s,%s,%s,%s); """

- For the transactions I will introduce a “operation_type” field which will be populated in case I know what kind of action is done in that tx: if this is the case, the “registers” could be set to NULL. the operation_type with refer to an operation name table, and then for each operation name that we know we will have a dedicated table to store the details of that operation, including the transaction id.

- Actually, maybe I should even store the “registers” into a separate table where I map a transaction id to that register only when not null ? ⇒ Naa, will not help much I think.

- And now let's create a method to collect the new blocks…

- Note: many of my classes are becoming too big… so, in an attempt to fix that, I'm now adding the support to delegate some work to dynamically loaded “handlers” from the NVPContext:

def get_handler(self, hname): """Get a handler by name""" if hname in self.handlers: return self.handlers[hname] # otherwise we have to search for that handler: comp_module = import_module(hname) handler = comp_module.handle self.handlers[hname] = handler return handler def call_handler(self, hname, *args, **kwargs): """Call a given handler with arguments""" handler = self.get_handler(hname) return handler(*args, **kwargs)

- Basically an handler will be a simple python script with a function called handle() in it.

- I can then give the full path to that module to load/call it dynamically.

- Or…🤔 In fact, let's also quickly add support for aliases in this… Or, no: after some more thinking I'm rather build a dedicated default support to find “handlers” specific to a given component in a sub folder called 'handlers' from where the component itself is defined:

def handle(self, hname, *args, **kwargs): """Call an handler specific to this component, should be found in a sub folder called 'handlers'""" return self.call_handler(f"{self.handlers_path}.{hname}", self, *args, **kwargs) - With the handlers_path being defined on construction of the component by the NVPContext (

set_contrust_frame()is called inNVPContext.create_component()now):def set_construct_frame(self, frame): """Assign a construct frame to this component""" self.construct_frame = frame # setup the default handlers path: parts = self.construct_frame["module"].split(".") # Replace the last name on the module path with "handlers" parts[-1] = "handlers" self.handlers_path = ".".join(parts)

- With that in place, the following syntax should work just fine (in BlockchainManager in this case):

if cmd == "collect.blocks": chain_name = self.get_param("chain") chain: EVMBlockchain = self.get_component(f"{chain_name}_chain") chain.handle("collect_evm_blocks") return True

- Yes! and indeed this works as expected 👍:

$ nvp bchain collect blocks 2022/06/17 08:40:18 [nvh.crypto.blockchain.handlers.collect_evm_blocks] INFO: Should collect blocks here on chain bsc

- Now let's add some content in there…

- First significant step is to store the addresses as triplet of values: here is what I created for that in ChainDB:

def get_address_id(self, addr): """Convert an address into an id""" self.check(len(addr) == 42, "Invalid address %s", addr) # Extract the relevant parts: # we will store 8bytes / 8bytes / 4bytes elements: # each byte takes 2 hex digits so thats 16 chars / 16 chars / 8 chars (excluding the '0x' prefix) addr = addr.lower() # We need to convert from uint to int values below: a1 = utl.to_int64(int(addr[2:18], 16)) a2 = utl.to_int64(int(addr[18:34], 16)) a3 = utl.to_int32(int(addr[34:], 16)) # Check if we already have that entry in the DB: cur = self.sql_db.execute("SELECT id from addresses WHERE a1=%s AND a2=%s AND a3=%s;") row = cur.fetchone() if row is not None: return row[0] # Address not found: we insert it: sql = "INSERT INTO addresses(a1,a2,a3) VALUES(%s,%s,%s) RETURNING id;" cur = self.sql_db.execute(sql, (a1, a2, a3), commit=True) row = cur.fetchone() addr_id = row[0] logger.info("Inserting address %s with id %d", addr, addr_id) return addr_id

- Tested on jupyter and it seems to work fine:

2022/06/17 09:45:55 [nvh.crypto.blockchain.chain_db] INFO: Inserting address 0xfcb5df42e06a39e233dc707bb3a80311efd11576 with id 101 Got ID: 101 2022/06/17 09:45:55 [nvh.crypto.blockchain.chain_db] INFO: Inserting address 0xed78e6efaabfba76c43c40ea99003325505e1869 with id 102 Got ID: 102 2022/06/17 09:45:55 [nvh.crypto.blockchain.chain_db] INFO: Inserting address 0xc67687524ab947bf41f35434971c817fa3663413 with id 103 Got ID: 103 2022/06/17 09:45:55 [nvh.crypto.blockchain.chain_db] INFO: Inserting address 0xdb0dec74e23394d1883e2ee859db98d6fe357695 with id 104 Got ID: 104 2022/06/17 09:45:55 [nvh.crypto.blockchain.chain_db] INFO: Inserting address 0x721c5ba18d3cddef5b94c45725f860a830dd067f with id 105 Got ID: 105 2022/06/17 09:45:55 [nvh.crypto.blockchain.chain_db] INFO: Inserting address 0x897fe07d613037c206429628611a2665e18c657d with id 106 Got ID: 106

- Now I need the opposite: getting an address from an id:

def get_address(self, addr_id): """Retrieve an addres from a given id""" cur = self.sql_db.execute("SELECT a1,a2,a3 from addresses WHERE id=%s;", (addr_id,)) row = cur.fetchone() if row is None: # No address found: logger.info("No address found with id %d", addr_id) return None # We convert back to uint then to hex: a1 = hex(utl.to_uint64(row[0])) a2 = hex(utl.to_uint64(row[1]))[2:] a3 = hex(utl.to_uint32(row[2]))[2:] # Return the reconstructed address: return f"{a1}{a2}{a3}"

- And this works too:

db.get_address(102) '0xed78e6efaabfba76c43c40ea99003325505e1869'

- So is my first working version for this collect_evm_blocks handler:

"""Handler function used to collect new blocks on a given chain""" import logging from nvh.crypto.blockchain.chain_db import ChainDB from nvh.crypto.blockchain.evm_blockchain import EVMBlockchain logger = logging.getLogger("collect_evm_blocks") def handle(chain: EVMBlockchain): """Handler function entry point""" # logger.info("Should collect blocks here on chain %s", chain.get_short_name()) sql = chain.get_db() # Get the latest block: last_block = chain.get_block("latest", full_tx=True) # Check what is the last block in the database: last_stored_bnum = sql.get_last_block_number() logger.info("Last stored block number is: %s", last_stored_bnum) if last_stored_bnum is None: # Then we simply store the current block: process_block(sql, last_block) else: # Process all the blocks from last_stored_bnum+1 to the last_block end_bnum = last_block["number"] cur_bnum = last_stored_bnum + 1 count = end_bnum - cur_bnum idx = 1 while cur_bnum < end_bnum: bck = chain.get_block(cur_bnum, full_tx=True) process_block(sql, bck, f"{idx*100.0/count:.2f}%: ") cur_bnum += 1 idx += 1 # Finally we process the final block: process_block(sql, last_block, "100%: ") def process_block(sql: ChainDB, bck, prefix=""): """Process a given block to store it in the DB""" # Get the info we want from the block itself: # (number,timestamp,miner,size,difficulty,tx_count,gas_used) txs = bck["transactions"] logger.info("%sBlock %d, %d txs", prefix, bck["number"], len(txs)) keylist = ("number", "timestamp", "size", "difficulty") desc = {key: bck[key] for key in keylist} desc["gas_used"] = bck["gasUsed"] desc["tx_count"] = len(txs) # **Note** we also need to retrive the id for the miner: desc["miner_id"] = sql.get_address_id(bck["miner"]) logger.debug("Should store block desc: %s", desc) sql.insert_blocks([desc]) process_transactions(sql, txs) def process_transactions(sql: ChainDB, txs): """Process a list of transactions""" descs = [] for trans in txs: txinput = trans["input"][2:] sig = "" registers = "" if len(txinput) >= 8: sig = txinput[:8] registers = txinput[8:] # Note: the to address may be null if we are creating a contract: to_id = trans["to"] if to_id is not None: to_id = sql.get_address_id(to_id) descs.append( { "block_number": trans["blockNumber"], "tx_index": trans["transactionIndex"], "from_id": sql.get_address_id(trans["from"]), "to_id": to_id, "gas": trans["gas"], "gas_price": trans["gasPrice"], "nonce": trans["nonce"], "value": trans["value"], "operation_type": 0, "sig": sig if len(sig) > 0 else None, "registers": registers if len(registers) > 0 else None, } ) # for desc in descs: # logger.info("Inserting tx: %s", desc) # sql.insert_txs([desc]) # logger.debug("Inserting %d transactions", len(descs)) sql.insert_txs(descs)

- The problem with this script is that if I run it on a regular basis with cron, I may still eventually face a situation where the previous run is not done yet (trying to catch up retrieving blocks) and I'm trying to run a new instance of the script…

- ⇒ So I'm thinking I should add a protection layer on top of that with a file lock in the runner to prevent dual scripts execution.

- OK: I can now specify a lock file for my scripts:

"bchain": { "lock_file": "${PROJECT_ROOT_DIR}/data/locks/bchain.lock", "custom_python_env": "bsc_env", "cmd": "${PYTHON} ${PROJECT_ROOT_DIR}/nvh/crypto/blockchain/blockchain_manager.py", "python_path": ["${PROJECT_ROOT_DIR}", "${NVP_ROOT_DIR}"] },

- but now I use to create separated scripts for the bsc/eth/celo chains for that operation, and now calling these in my 5mins cron job:

lfile="${log_dir}/collect_bsc_blocks.log" nvp collect_bsc_blocks 2>&1 | tee -a $lfile lfile="${log_dir}/collect_eth_blocks.log" nvp collect_eth_blocks 2>&1 | tee -a $lfile lfile="${log_dir}/collect_celo_blocks.log" nvp collect_celo_blocks 2>&1 | tee -a $lfile

Collecting pairs on celo

- Now that I've got my automatic block data retrieval in place, I can get back to the routers I discovered in the first part of this post: I should try and get all the pairs available on them.

- Side note: now I actually have much more blocks/transactions available to perform the data mining:

nblocks = db.get_num_blocks() nblocks output: 12555 ntx = db.get_num_transactions() ntx output: 146829

- And so starting with my list of router function signatures, I could collect all the “to_id” from the transactions where one of those signatures is used:

sql = "SELECT to_id from transactions WHERE sig = ANY(%s);" cur = db.execute(sql, (sigs_i32,)) rows = cur.fetchall() ids = [row[0] for row in rows] id_counts = {} for to_id in ids: id_counts[to_id] = id_counts.get(to_id,0)+1 id_counts output: {305: 608, 313: 817, 570: 48}

- Then converting those IDs back to addresses:

# Get the addresses for those IDs: routers = [] for addid in id_counts.keys(): routers.append(chain.get_address(addid)) routers output: ['0xE3D8bd6Aed4F159bc8000a9cD47CffDb95F96121', '0x1421bDe4B10e8dd459b3BCb598810B1337D56842', '0x7D28570135A2B1930F331c507F65039D4937f66c']

- And these are exactly the addresses I found initially manually digging into the blocks data, so all good 👍!

- Now I start creating the config for those dexes:

- Checking 0x1421bDe4B10e8dd459b3BCb598810B1337D56842:

- From factory contract we see that the pairs do have support for flashloans in the swap function OK

- And the swap fee points should be set to 30

- Testing retrieval of the pairs from that first dex… arrff, not quite there yet 🙃:

$ nvp bchain update-pairs -c celo celoswap1 2022/06/18 08:11:04 [nvh.crypto.blockchain.evm_smart_contract] INFO: Writing ABI file for contract 0x62d5b84bE28a183aBB507E125B384122D2C25fAE 2022/06/18 08:11:10 [nvh.crypto.blockchain.evm_smart_contract] INFO: Writing ABI file for contract 0x1421bDe4B10e8dd459b3BCb598810B1337D56842 2022/06/18 08:11:15 [__main__] INFO: Updating pairs on CeloSwap1 2022/06/18 08:11:15 [nvh.crypto.blockchain.uniswap_base] INFO: Initial number of pairs: 0 2022/06/18 08:11:15 [nvh.crypto.blockchain.uniswap_base] INFO: Adding 660 pairs to CeloSwap1 2022/06/18 08:11:16 [nvh.crypto.blockchain.evm_smart_contract] INFO: Writing ABI file for contract 0x1E593F1FE7B61c53874B54EC0c59FD0d5eb8621e 2022/06/18 08:11:21 [nvh.crypto.blockchain.erc20_pair] INFO: Registering pair at 0x1E593F1FE7B61c53874B54EC0c59FD0d5eb8621e 2022/06/18 08:11:21 [nvh.crypto.blockchain.evm_smart_contract] INFO: Writing ABI file for contract 0x471EcE3750Da237f93B8E339c536989b8978a438 2022/06/18 08:11:26 [nvh.crypto.blockchain.erc20_token] ERROR: Exception occured while trying to initiate token at 0x471EcE3750Da237f93B8E339c536989b8978a438: Invalid function with name name 2022/06/18 08:11:26 [nvh.crypto.blockchain.evm_blockchain] INFO: Adding new token 0x471EcE3750Da237f93B8E339c536989b8978a438 (???) 2022/06/18 08:11:27 [nvh.crypto.blockchain.evm_smart_contract] INFO: Writing ABI file for contract 0x765DE816845861e75A25fCA122bb6898B8B1282a 2022/06/18 08:11:32 [nvh.crypto.blockchain.erc20_token] ERROR: Exception occured while trying to initiate token at 0x765DE816845861e75A25fCA122bb6898B8B1282a: Invalid function with name name 2022/06/18 08:11:32 [nvh.crypto.blockchain.evm_blockchain] INFO: Adding new token 0x765DE816845861e75A25fCA122bb6898B8B1282a (???) 2022/06/18 08:11:32 [nvh.crypto.blockchain.chain_db] INFO: Replacing symbol ??? with ???#2 2022/06/18 08:11:32 [nvh.crypto.blockchain.uniswap_base] INFO: 0/660: Added pair on ???/???#2 2022/06/18 08:11:33 [nvh.crypto.blockchain.uniswap_base] INFO: Iteration 0: CPU usage: 0.00%, RAM usage: 89.28 MB 2022/06/18 08:11:33 [nvh.crypto.blockchain.evm_smart_contract] INFO: Writing ABI file for contract 0x7B7b05A80b6041cD039C4e487f269f47A776e396 2022/06/18 08:11:38 [nvh.crypto.blockchain.erc20_pair] INFO: Registering pair at 0x7B7b05A80b6041cD039C4e487f269f47A776e396 Traceback (most recent call last): File "D:\Projects\NervHome\nvh\crypto\blockchain\blockchain_manager.py", line 447, in <module> comp.run() File "D:\Projects\NervProj\nvp\nvp_component.py", line 93, in run res = self.process_command(cmd) File "D:\Projects\NervProj\nvp\nvp_component.py", line 83, in process_command return self.process_cmd_path(self.ctx.get_command_path()) File "D:\Projects\NervHome\nvh\crypto\blockchain\blockchain_manager.py", line 112, in process_cmd_path dex.update_pairs() File "D:\Projects\NervHome\nvh\crypto\blockchain\uniswap_base.py", line 393, in update_pairs pair = self.chain.get_pair(paddr, pool_id=idx, ex_id=self.get_id()) File "D:\Projects\NervHome\nvh\crypto\blockchain\evm_blockchain.py", line 891, in get_pair pair = ERC20Pair(chain=self, address=addr, desc=desc, ex_id=ex_id, pool_id=pool_id) File "D:\Projects\NervHome\nvh\crypto\blockchain\erc20_pair.py", line 52, in __init__ self.t0 = self.chain.get_token(t0addr) File "D:\Projects\NervHome\nvh\crypto\blockchain\evm_blockchain.py", line 809, in get_token token = self.create_token(address=addr, desc=desc) File "D:\Projects\NervHome\nvh\crypto\blockchain\evm_blockchain.py", line 774, in create_token return ERC20Token(chain=self, address=address, desc=desc) File "D:\Projects\NervHome\nvh\crypto\blockchain\erc20_token.py", line 36, in __init__ self.contract = self.chain.get_contract(self.addr, abi_file) File "D:\Projects\NervHome\nvh\crypto\blockchain\evm_blockchain.py", line 468, in get_contract contract = EVMSmartContract(self, address, abi_file) File "D:\Projects\NervHome\nvh\crypto\blockchain\evm_smart_contract.py", line 34, in __init__ abi_file = self.download_abi() File "D:\Projects\NervHome\nvh\crypto\blockchain\evm_smart_contract.py", line 64, in download_abi self.check(msg[0:2] == "OK", "Cannot retrieve contract ABI: %s (url=%s)", msg, url) File "D:\Projects\NervProj\nvp\nvp_object.py", line 73, in check raise NVPCheckError(fmt % args) nvp.nvp_object.NVPCheckError: Cannot retrieve contract ABI: Contract source code not verified (url=https://explorer.celo.org/api?module=contract&action=getabi&address=0x39d6477522eB543d750AF82537325FB2930c1Aa6) 2022/06/18 08:11:40 [nvp.nvp_object] ERROR: Subprocess terminated with error code 1 (cmd=['D:\\Projects\\NervProj\\.pyenvs\\bsc_env\\python.exe', 'D:\\Projects\\NervHome/nvh/crypto/blockchain/blockchain_manager.py', 'update-pairs', '-c', 'celo', 'celoswap1']) 2022/06/18 08:11:40 [nvp.components.runner] ERROR: Error occured in script command: cmd=['D:\\Projects\\NervProj\\.pyenvs\\bsc_env\\python.exe', 'D:\\Projects\\NervHome/nvh/crypto/blockchain/blockchain_manager.py', 'update-pairs', '-c', 'celo', 'celoswap1'] cwd=None return code=1 lastest outputs: pair = self.chain.get_pair(paddr, pool_id=idx, ex_id=self.get_id()) File "D:\Projects\NervHome\nvh\crypto\blockchain\evm_blockchain.py", line 891, in get_pair pair = ERC20Pair(chain=self, address=addr, desc=desc, ex_id=ex_id, pool_id=pool_id) File "D:\Projects\NervHome\nvh\crypto\blockchain\erc20_pair.py", line 52, in __init__ self.t0 = self.chain.get_token(t0addr) File "D:\Projects\NervHome\nvh\crypto\blockchain\evm_blockchain.py", line 809, in get_token token = self.create_token(address=addr, desc=desc) File "D:\Projects\NervHome\nvh\crypto\blockchain\evm_blockchain.py", line 774, in create_token return ERC20Token(chain=self, address=address, desc=desc) File "D:\Projects\NervHome\nvh\crypto\blockchain\erc20_token.py", line 36, in __init__ self.contract = self.chain.get_contract(self.addr, abi_file) File "D:\Projects\NervHome\nvh\crypto\blockchain\evm_blockchain.py", line 468, in get_contract contract = EVMSmartContract(self, address, abi_file) File "D:\Projects\NervHome\nvh\crypto\blockchain\evm_smart_contract.py", line 34, in __init__ abi_file = self.download_abi() File "D:\Projects\NervHome\nvh\crypto\blockchain\evm_smart_contract.py", line 64, in download_abi self.check(msg[0:2] == "OK", "Cannot retrieve contract ABI: %s (url=%s)", msg, url) File "D:\Projects\NervProj\nvp\nvp_object.py", line 73, in check raise NVPCheckError(fmt % args) nvp.nvp_object.NVPCheckError: Cannot retrieve contract ABI: Contract source code not verified (url=https://explorer.celo.org/api?module=contract&action=getabi&address=0x39d6477522eB543d750AF82537325FB2930c1Aa6)

- First noticeable error here is this:

2022/06/18 08:11:26 [nvh.crypto.blockchain.erc20_token] ERROR: Exception occured while trying to initiate token at 0x471EcE3750Da237f93B8E339c536989b8978a438: Invalid function with name name

- Ahhh, I see… this is actually a proxy contract, presenting only two public functions:

_getOwner()and_getImplementation(), with the later returning the address of the target implementation for that token. - Hmmm, actually the problem is simply that I'm not providing a default ERC20 abi file yet on celo with that part of the code:

if abi_file is None: abi_file = self.chain.get_token_abi_filename() - OK Now it's working better:

$ nvp bchain update-pairs -c celo celoswap1 2022/06/18 08:37:09 [__main__] INFO: Updating pairs on CeloSwap1 2022/06/18 08:37:09 [nvh.crypto.blockchain.uniswap_base] INFO: Initial number of pairs: 0 2022/06/18 08:37:09 [nvh.crypto.blockchain.uniswap_base] INFO: Adding 660 pairs to CeloSwap1 2022/06/18 08:37:09 [nvh.crypto.blockchain.erc20_pair] INFO: Registering pair at 0x1E593F1FE7B61c53874B54EC0c59FD0d5eb8621e 2022/06/18 08:37:10 [nvh.crypto.blockchain.evm_blockchain] INFO: Adding new token 0x471EcE3750Da237f93B8E339c536989b8978a438 (CELO) 2022/06/18 08:37:10 [nvh.crypto.blockchain.evm_blockchain] INFO: Adding new token 0x765DE816845861e75A25fCA122bb6898B8B1282a (cUSD) 2022/06/18 08:37:10 [nvh.crypto.blockchain.uniswap_base] INFO: 0/660: Added pair on CELO/cUSD 2022/06/18 08:37:10 [nvh.crypto.blockchain.uniswap_base] INFO: Iteration 0: CPU usage: 0.00%, RAM usage: 88.96 MB 2022/06/18 08:37:11 [nvh.crypto.blockchain.erc20_pair] INFO: Registering pair at 0x7B7b05A80b6041cD039C4e487f269f47A776e396 2022/06/18 08:37:11 [nvh.crypto.blockchain.evm_blockchain] INFO: Adding new token 0x39d6477522eB543d750AF82537325FB2930c1Aa6 (KEY) 2022/06/18 08:37:11 [nvh.crypto.blockchain.uniswap_base] INFO: 1/660: Added pair on KEY/CELO 2022/06/18 08:37:11 [nvh.crypto.blockchain.erc20_pair] INFO: Registering pair at 0x68f5ad364Aa0fc13a5FAdE9Ddf6BadA7DFCB026c 2022/06/18 08:37:12 [nvh.crypto.blockchain.evm_blockchain] INFO: Adding new token 0x041954F3f34422aF8d1f11fD743f3A1B70C30271 (pUSD) 2022/06/18 08:37:12 [nvh.crypto.blockchain.uniswap_base] INFO: 2/660: Added pair on pUSD/CELO 2022/06/18 08:37:12 [nvh.crypto.blockchain.erc20_pair] INFO: Registering pair at 0xa2e63c3b9D027270Ff5892A552aaac50515ee61D 2022/06/18 08:37:12 [nvh.crypto.blockchain.evm_blockchain] INFO: Adding new token 0x39049C02A56c3ecd046F6c2A9BE0CffA2bc29C08 (MCRS) 2022/06/18 08:37:13 [nvh.crypto.blockchain.uniswap_base] INFO: 3/660: Added pair on MCRS/CELO 2022/06/18 08:37:13 [nvh.crypto.blockchain.erc20_pair] INFO: Registering pair at 0x5724CcCFef36B20250817Aa28E0D68707bDd0D9A 2022/06/18 08:37:13 [nvh.crypto.blockchain.evm_blockchain] INFO: Adding new token 0xF06768797ba4e86abFa5aDFBFD223742F2657960 (XGP) 2022/06/18 08:37:13 [nvh.crypto.blockchain.uniswap_base] INFO: 4/660: Added pair on CELO/XGP 2022/06/18 08:37:14 [nvh.crypto.blockchain.erc20_pair] INFO: Registering pair at 0xA413103064E6Fb32744f39Cf3B9D16168ded7590

- OK ⇒ Got the 660 pairs without issue.

- Now checking 0xE3D8bd6Aed4F159bc8000a9cD47CffDb95F96121

- Ohh shit… that router is actually pointing to the same Factory address as the previous one (0x62d5b84bE28a183aBB507E125B384122D2C25fAE) 😰 So, there is no point in creating another Dex entry for that one.

- Now checking 0x7D28570135A2B1930F331c507F65039D4937f66c

- Crap ⇒ That one is not verified: so let's use the ABI from another router to try to load it and get the factory address:

sc = chain.get_contract("0x7D28570135A2B1930F331c507F65039D4937f66c", abi_file="ABI/celo/0x1421bDe4B10e8dd459b3BCb598810B1337D56842.json") sc.call_function("factory") output: 2022/06/18 08:58:37 [nvh.crypto.blockchain.evm_smart_contract] ERROR: Exception occured while calling 'factory' (on 0x7D28570135A2B1930F331c507F65039D4937f66c): execution reverted 2022/06/18 08:58:39 [nvh.crypto.blockchain.evm_smart_contract] ERROR: Exception occured while calling 'factory' (on 0x7D28570135A2B1930F331c507F65039D4937f66c): execution reverted 2022/06/18 08:58:42 [nvh.crypto.blockchain.evm_smart_contract] ERROR: Exception occured while calling 'factory' (on 0x7D28570135A2B1930F331c507F65039D4937f66c): execution reverted - Arrff… doesn't work of course. But anyway, just checking some of the transactions going in this router address we see that we eventually get back to pairs from the same factory above 0x62d5b84bE28a183aBB507E125B384122D2C25fAE

- So, conclusion of this is: we only have one uniswap compatible factory on celo right now, so we won't be able to run any kind of arbitrage monitoring yet there 😅.

Studying other blockchain statistics

- So in our investigations above we discovered that the 3 uniswap routers are all linked to the same factory contract. And in the end, this means all the transactions on those routers could be counted together. So that's currently a total of 3329/228014 transactions ⇒ about 1.5% only which seems very low.

- The question I'm asking myself is then: what are the other transactions doing ? And which ones are the most significant then ? Let's try to find out.

- First, let's get the most used contracts : ie. reading the 'to_id' from all the transations:

# Get the most used contracts sql = "SELECT to_id from transactions;" cur = db.execute(sql, (sigs_i32,)) rows = cur.fetchall() ids = [row[0] for row in rows] # total number of transactions: ntx = len(ids) print(f"Number of transactions: {ntx}") # Now we group the to ids: id_counts = {} for to_id in ids: id_counts[to_id] = id_counts.get(to_id,0)+1 nsc = len(id_counts) print(f"Number of different to contracts: {nsc}") # We divide by the total number of tx to get a percentage: for key, val in id_counts.items(): id_counts[key] = 100.0*val / ntx # sort the contracts: sclist = [(key,val) for key,val in id_counts.items()] sclist.sort(key = lambda item: item[1], reverse=True) for idx, item in enumerate(sclist[:20]): addr = chain.get_address(item[0]) print(f"{addr}: {item[1]:.2f}%") - Output:

Number of transactions: 231191 Number of different to contracts: 3403 0x6A3A5F4e72C7e0258dEd54157597d4F74Fba0FF9: 32.29% 0x5e00A28B8E1B4eD59b480D46fe2ec9d2aFCA8015: 13.28% 0x00F8525ABb78Ea86233970b5fB6b95c0844d0126: 7.22% 0xa16F9f78C70A16Fdc7637Fd8B96FD69Ea6f496E3: 6.52% 0x765DE816845861e75A25fCA122bb6898B8B1282a: 5.06% 0xefB84935239dAcdecF7c5bA76d8dE40b077B7b33: 4.83% 0x4B19240BC722d2f367A2dF36Fc38AEa7b4533F5c: 3.61% 0xF35ed7156BABF2541E032B3bB8625210316e2832: 2.52% 0x7D1e0D8b0810730e85828EaE1ee1695a95eECf4B: 1.80% 0xa2bf67e12EeEDA23C7cA1e5a34ae2441a17789Ec: 1.51% 0x056d9AAC902cc2925BB31f6C516B1e1579c35df9: 1.51% 0x6A39822cb58C2C3850CDcC96Ee4d7b707772Eecc: 1.48% 0x43D7937C08af98885Dab2481fed00ee3cFc5f22c: 1.45% 0x67316300f17f063085Ca8bCa4bd3f7a5a3C66275: 1.37% 0xeEd64Ef6010a360cDAA9B05cae65901b3250EB91: 0.93% 0xAB7064715B7944110EF7138C0c55D3c4132BdFd5: 0.82% 0xE3D8bd6Aed4F159bc8000a9cD47CffDb95F96121: 0.78% 0x194D9851250Aa8F75f896a6cbb5153a3E20dAfA0: 0.74% 0x1421bDe4B10e8dd459b3BCb598810B1337D56842: 0.63% 0x7BF9DbEcb37BF634769Cc0A93fAAB2Df157128C4: 0.62%

- Lets' check some of those most used contracts:

- 0x6A3A5F4e72C7e0258dEd54157597d4F74Fba0FF9: constantly receiving hundreds of transactions but all are failing.

- but the error message seem to be related to a uniswap operation ? (revert reason: “UniswapV2Router: INSUFFICIENT_OUTPUT_AMOUNT” ?)

- No code available, so would need to decompile the bytecode to investigate this one further: not something I want to do for now.

- Note: Could install https://github.com/crytic/pyevmasm eventually ? But I feel lazy right now.

- 0x5e00A28B8E1B4eD59b480D46fe2ec9d2aFCA8015:

- In this one the same source will always send multiple transactions for a given nonce and thus replace them multiple times because a last one can be completed.

- Eventually we observe this kind of successful transactions: https://explorer.celo.org/tx/0xa862891044c5b46a028511a580612a947dd6f7776c83eb3221c065fd509cb573/token-transfers

- ⇒ It seems there is some pretty complex algorithm at play here… but I can't really understand what this is and why it's worth it.

- 0x00F8525ABb78Ea86233970b5fB6b95c0844d0126:

- Same thing for that one: it seems to be executed continuously from the same from address with small increments on the gas fee multiple times for a single nonce.

- And then I tried the opposite: finding the contracts that are generating the most transactions:

# Get the most active contracts: sql = "SELECT from_id from transactions;" cur = db.execute(sql, (sigs_i32,)) rows = cur.fetchall() ids = [row[0] for row in rows] # total number of transactions: ntx = len(ids) print(f"Number of transactions: {ntx}") # Now we group the to ids: id_counts = {} for to_id in ids: id_counts[to_id] = id_counts.get(to_id,0)+1 nsc = len(id_counts) print(f"Number of different from contracts: {nsc}") # We divide by the total number of tx to get a percentage: for key, val in id_counts.items(): id_counts[key] = 100.0*val / ntx # sort the contracts: sclist = [(key,val) for key,val in id_counts.items()] sclist.sort(key = lambda item: item[1], reverse=True) print("Most active users:") for idx, item in enumerate(sclist[:20]): addr = chain.get_address(item[0]) print(f"{addr}: {item[1]:.2f}%")

- Note: I also discovered the celoscan website in the process, available at: https://celoscan.xyz/, this is almost the same as etherscan/bscscan, so more familiar to use than https://explorer.celo.org/

- What we notice in the contracts reported from the code above is that a great lot of contracts are just automated contracts calling the same function over and over again: I feel I should not take these into account: I rather want the list of “real user contracts” so I could then see where those users are going (ie. with which contracts they are interacting)

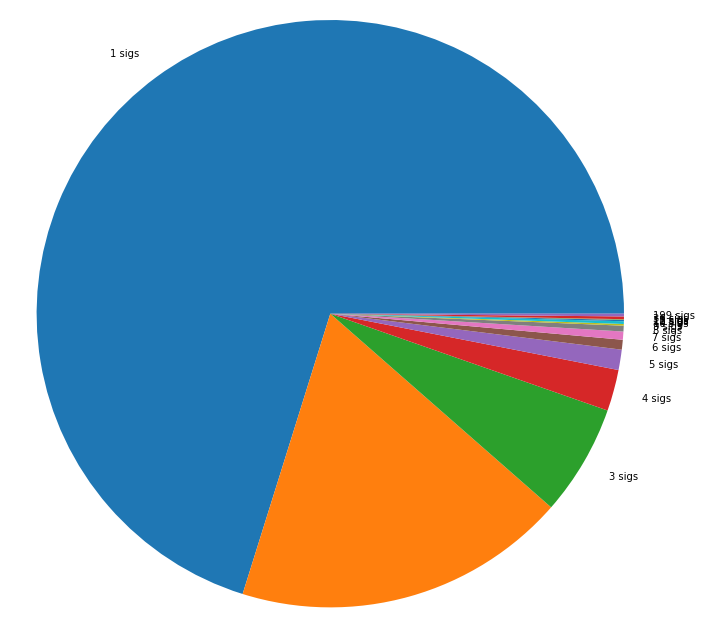

- So first let's compute how many different signatures we have for each from address:

# compute the number of unique signature for each from address: sql = "SELECT from_id,sig from transactions;" cur = db.execute(sql, (sigs_i32,)) rows = cur.fetchall() ids = [(row[0],row[1]) for row in rows] # total number of transactions: ntx = len(ids) print(f"Number of transactions: {ntx}") # Now we group the to ids / signatures: descs = {} for elem in ids: addr_id = elem[0] if addr_id not in descs: descs[addr_id] = { 'count': 1, 'sigs': set([elem[1]]) } else: descs[addr_id]['count'] += 1 descs[addr_id]['sigs'].add(elem[1]) nsc = len(descs) print(f"Number of different from contracts: {nsc}") # We divide by the total number of tx to get a percentage: for key, desc in descs.items(): desc['id'] = key desc['percent'] = desc['count']*100.0 / ntx desc['nsigs'] = len(desc['sigs']) # sort the contracts: sclist = list(descs.values()) sclist.sort(key = lambda item: item['nsigs']) # print("Users per number of sigs:") # for idx, item in enumerate(sclist[:20]): # addr = chain.get_address(item['id']) # print(f"{addr}: {item['nsigs']}") # Compute the number of contracts per number of sigs: sig_buckets = {} for desc in sclist: nsig = desc['nsigs'] sig_buckets[nsig] = sig_buckets.get(nsig,0)+1 for key, val in sig_buckets.items(): num = sig_buckets[key] percent = num*100.0/nsc print(f"{key} sig(s): {num} contracts ({percent:.2f}%)")

- Outputs:

Number of transactions: 322624 Number of different from contracts: 5368 1 sig(s): 3767 contracts (70.18%) 2 sig(s): 986 contracts (18.37%) 3 sig(s): 328 contracts (6.11%) 4 sig(s): 122 contracts (2.27%) 5 sig(s): 60 contracts (1.12%) 6 sig(s): 29 contracts (0.54%) 7 sig(s): 24 contracts (0.45%) 8 sig(s): 17 contracts (0.32%) 9 sig(s): 5 contracts (0.09%) 10 sig(s): 8 contracts (0.15%) 11 sig(s): 4 contracts (0.07%) 12 sig(s): 1 contracts (0.02%) 13 sig(s): 1 contracts (0.02%) 36 sig(s): 8 contracts (0.15%) 199 sig(s): 8 contracts (0.15%)

- Or graphically:

- I should definitely not pay too much attention to contracts calling only a single type of transaction. But I'm not quite sure about 2 tx sigs, let's see…

- So here is how I filter those contracts that are always calling only a single method out:

# filter by number of sigs: sclist = list(descs.values()) from_ids = [elem['id'] for elem in sclist if elem['nsigs']>1] # Now get the to_ids from all those from_ids: sql = "SELECT to_id from transactions WHERE from_id = ANY(%s);" cur = db.execute(sql, (from_ids,)) rows = cur.fetchall() ids = [row[0] for row in rows] # count the number of occurences for each to address: counts = {} for tid in ids: counts[tid] = counts.get(tid,0)+1 ntx = len(ids) to_ids = [(key,val) for key,val in counts.items()] to_ids.sort(key=lambda item: item[1], reverse=True) print("Most used contracts:") for elem in to_ids[:20]: addr = chain.get_address(elem[0]) percent = elem[1]*100/ntx print(f"{addr}: {percent:.2f}%") - And the outputs I get out of this is now:

0x5e00A28B8E1B4eD59b480D46fe2ec9d2aFCA8015: 19.31% 0x4B19240BC722d2f367A2dF36Fc38AEa7b4533F5c: 13.01% 0xefB84935239dAcdecF7c5bA76d8dE40b077B7b33: 9.85% 0x43D7937C08af98885Dab2481fed00ee3cFc5f22c: 6.31% 0x056d9AAC902cc2925BB31f6C516B1e1579c35df9: 5.19% 0x67316300f17f063085Ca8bCa4bd3f7a5a3C66275: 4.71% 0x194D9851250Aa8F75f896a6cbb5153a3E20dAfA0: 4.33% 0x765DE816845861e75A25fCA122bb6898B8B1282a: 4.26% 0xE3D8bd6Aed4F159bc8000a9cD47CffDb95F96121: 3.21% 0xAB7064715B7944110EF7138C0c55D3c4132BdFd5: 3.18% 0xF35ed7156BABF2541E032B3bB8625210316e2832: 3.05% 0xb90c0a9008f1bafc12C751Ae33E05Eb7E0002132: 2.53% 0xe5c3A4Dd4eD0d6329398B79B1e61228004b35060: 1.28% 0x1421bDe4B10e8dd459b3BCb598810B1337D56842: 1.27% 0x52c2c35b4f5F03d9097c702c1a90597659ec2DDa: 1.10% 0xc8Fa2DEeDD603c754846a21eDf257abbfF542458: 1.05% 0xbDb92Ca42559adc5adC20a1E4985dC7c476483be: 0.94% 0x471EcE3750Da237f93B8E339c536989b8978a438: 0.93% 0xE383394B913d7302c49F794C7d3243c429d53D1d: 0.49% 0x918146359264C492BD6934071c6Bd31C854EDBc3: 0.47%

- ⇒ This is great because it shows that the most used contract by “real people” is the router we discovered ealier with a 19% of the share which seems a realistic/appropriate share value.

- But then no idea about 0x4B19240BC722d2f367A2dF36Fc38AEa7b4533F5c: there is no code assigned to that one ?

- 0xefB84935239dAcdecF7c5bA76d8dE40b077B7b33: A “SortedOraclesProxy” contract might be part of the monorepo from here: https://github.com/celo-org/celo-monorepo/issues/3187 ?

- 0x43D7937C08af98885Dab2481fed00ee3cFc5f22c: No idea what this is (no verified code)

- 0x056d9AAC902cc2925BB31f6C516B1e1579c35df9: A “SlidingWindowOracle”, seems to come from our single factory contract…

- etc, etc…

Conclusion

- And here we are, not really in a much better position, but we could at least filter some of the transactions on CELO in different ways, and start building some kind of data mining system on the blockchain.

- Now what I'm really after for the moment are multiple DEX on a single chain to test my arbitrage system further, and unfortunately it seems CELO is not ready for that since we only have one factory contract in the end.

- Which is getting me thinking… maybe I should create my own DEX myself copying the uniswap contracts there ? 😅 But that's another story…